Troubleshooting & Limitations

When asking a question about data within Slack, the link to the thread isn’t always the right one

When messages are not threaded, Dust groups consecutive channel messages into a chunk, and the link points to the first message of that chunk rather than to the exact message you asked about. To optimize Dust's synchronization and retrieval of Slack messages, we recommend formatting Slack conversations as follows: post a first message with the title, then continue the discussion in a thread under it.

I haven’t received a login, or I am having trouble logging in

If you experience issues logging in, please message your workspace Admin or contact our team at [email protected], and we will investigate.

I try to create an agent using the Table Query tool, but it doesn't work

The Table Query tool can't automatically create a relation between tables. You need to explicitly set the relationships in your instructions. Clearly explain the structure of your data to the agent. Describe how tables are related and where to find specific information.
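As an illustration of what explicit relationships can look like, here is a minimal Python sketch that turns a list of join keys into plain sentences you could paste into an agent's instructions. The table and column names (`orders`, `customers`, `order_items`) and the helper `describe_relationships` are hypothetical and not part of Dust.

```python
# Hypothetical tables and join keys, for illustration only.
RELATIONSHIPS = [
    ("orders", "customer_id", "customers", "id"),
    ("order_items", "order_id", "orders", "id"),
]


def describe_relationships(relationships):
    """Render table relationships as plain sentences for an agent's instructions."""
    lines = ["The tables are related as follows:"]
    for child, child_col, parent, parent_col in relationships:
        lines.append(
            f"- Each row in `{child}` refers to one row in `{parent}`: "
            f"`{child}.{child_col}` matches `{parent}.{parent_col}`."
        )
    return "\n".join(lines)


print(describe_relationships(RELATIONSHIPS))
```

Writing the relationships out this way keeps the wording consistent when you have many tables; the resulting text still needs a short description of what each table contains.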

Table Format:

If you are using a CSV or a Google Sheet: verify that the first line is a header row made of column names. It must not be empty, and it must not contain raw text (for example, guidance for reading the document).

The Dust CSV parsing logic focuses on identifying the header row and the subsequent data rows. It doesn't have built-in functionality to recognize and handle introductory text or guidance information that precedes the actual CSV structure.
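Before uploading a file, you can run a quick local check on its first line. The sketch below uses only Python's standard `csv` module. It is a heuristic of our own for illustration, not Dust's actual parsing logic, and the function name `header_problems` is hypothetical.

```python
import csv
import io


def header_problems(csv_text: str) -> list[str]:
    """Return likely problems with the first row of a CSV (heuristic only)."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    if not rows or not any(cell.strip() for cell in rows[0]):
        return ["first line is empty"]

    header = [cell.strip() for cell in rows[0]]
    # Ignore fully blank lines when looking for the first data row.
    data_rows = [row for row in rows[1:] if any(cell.strip() for cell in row)]
    problems = []

    if any(not name for name in header):
        problems.append("header has blank column names")
    if len(set(header)) != len(header):
        problems.append("header has duplicate column names")
    # Introductory text usually parses as one cell, while data rows have several.
    if data_rows and len(header) != len(data_rows[0]):
        problems.append("header width differs from first data row")
    return problems


# An introductory sentence above the real header is flagged.
print(header_problems("Read this sheet first\nname,price\nchair,10\n"))
```

An empty list means the first line looks like a header row. Any reported problem is a hint to remove introductory text or fix the column names before syncing the file.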

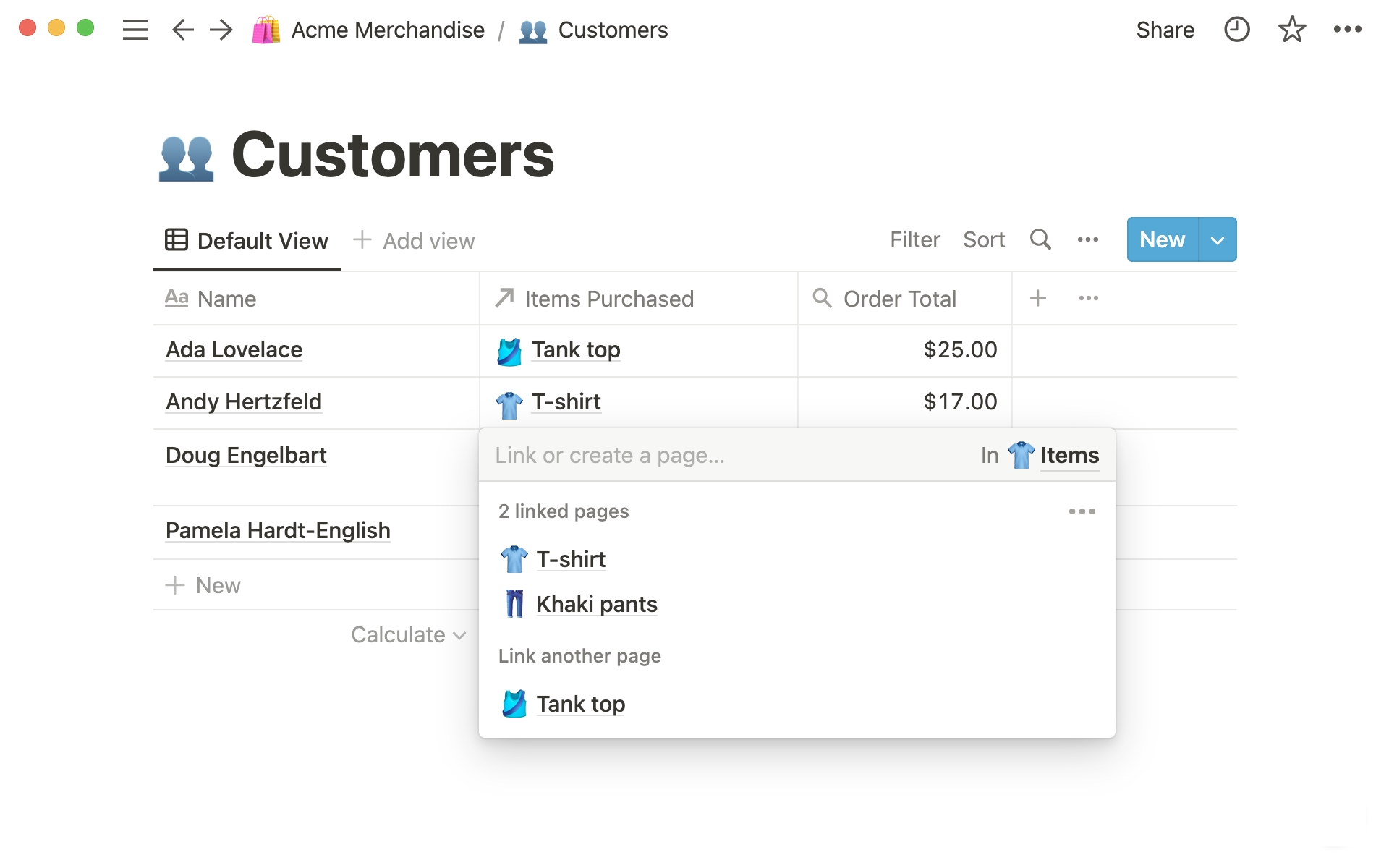

If you are using a Notion Database: a table view in Notion doesn't mean Dust has access to all the databases that view uses. Ensure all related Notion databases are within Dust's indexed data scope. To identify related databases, look at the "Relations & rollups" properties in your Notion Database, like the Item Purchased column in the picture below.

The agent is producing links that don’t work and making untrue claims. What’s going on?

- Agents are limited to text responses and can't browse the internet or use unapproved tools. They rely on approved data sources and Admin permissions.

- GPT-4 and Claude are transformer-based models. They are trained to predict the next word in a sequence using probability, not rules of grammar. For example, if you input 'chair', the model predicts a likely next word based on patterns in its training data, but it doesn't really "understand" what a chair is. That's why the agent might sometimes make mistakes or "hallucinate".

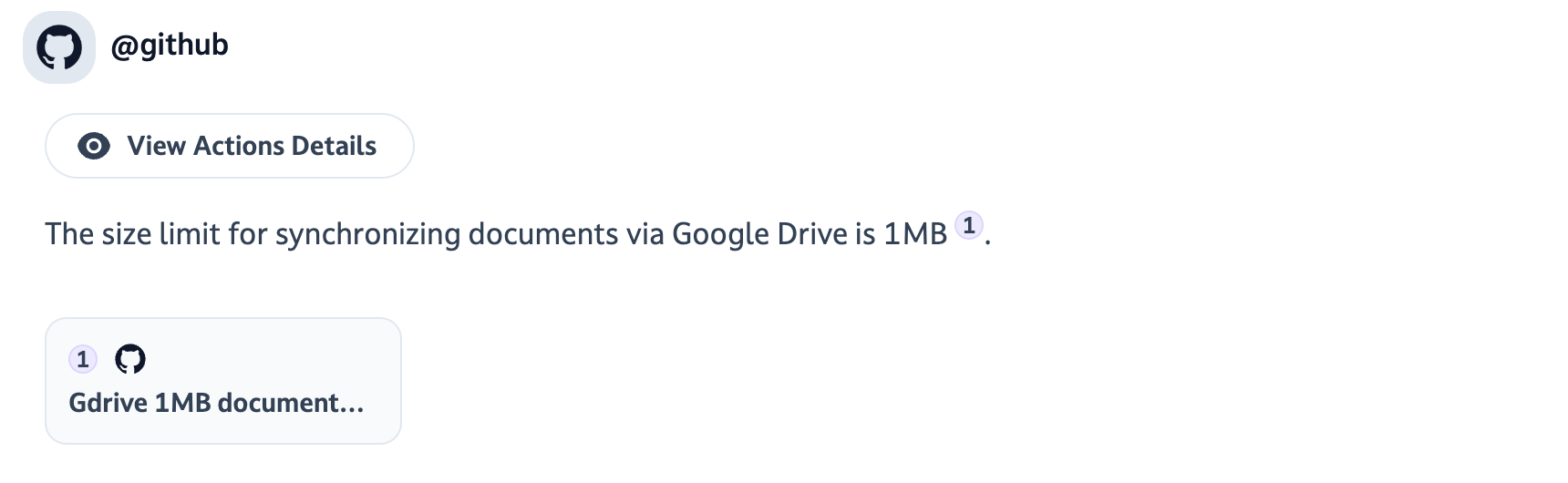

- For links in particular, we recommend not asking for them in your prompts, because models tend to make them up. Use the citation numbers (the purple numbers) instead ⬇️

- Learn more about how to optimize your prompts in How to optimize your agents

Why doesn't the agent remember what I said earlier in a conversation?

Agents have a limited memory for conversation context. They may forget earlier parts of a long chat, which can result in incorrect responses. As Dust and Large Language Models (LLMs) evolve, agents' ability to handle longer conversations will improve.

Can Dust remember and incorporate my feedback in the future?

Agents have a short memory and don't recall past conversations.

You can't "train" the agent to answer better by talking with it, but you can improve its performance by:

- Iterating on and continuously improving your agent's prompt: the more you tailor it to your needs, the better!

- Including a "constitution" (a list of rules and principles) in the agent's instructions to give the agent guidance on your writing or thinking style.

- Adding examples of good answers to your prompt.

- Improving your data sources: the clearer your documentation is, the more accurate your agent's answers will be.

Also, please note that we do not offer the possibility to use Agents for customer-facing tasks. They can only be used by you and your colleagues who are Dust members.

Is Dust down?

Check and subscribe to https://dust.statuspage.io/ for real-time status updates.
